AI Human Impact Example:

OkCupid & the Principle of Dignity

OkCupid doesn’t really know what it’s doing. Neither does any other website. It’s not like people have been building these things for very long, or you can go look up a blueprint or something. Most ideas are bad. Even good ideas could be better. Experiments are how you sort all this out.

We noticed that people didn’t like it when Facebook “experimented” with their news feed. But guess what, everybody: if you use the Internet, you’re the subject of hundreds of experiments at any given time, on every site. That’s how websites work.

- Christian Rudder, OkCupid Blog

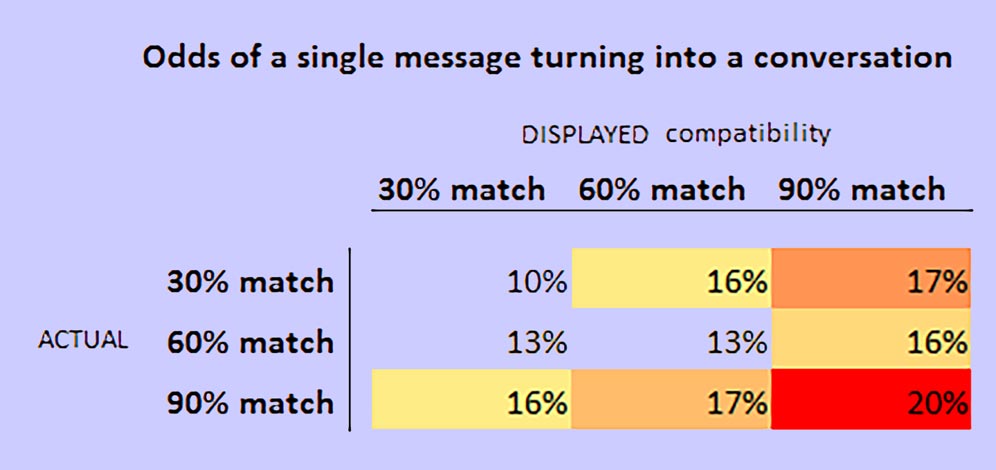

In one of those experiments, users whom the OkCupid algorithms determined to be incompatible were told the opposite. When they connected, their interaction was charted by the platform’s standard metrics: how many times did they message each other, with how many words, over how long a period, and so on. Then their relationship success was compared against pairs who were judged truly compatible. Presumably, the test measured the power of positive suggestion: Do incompatible users who are told they are compatible relate with the same success as true compatibles?

The answer is not as interesting as the users’ responses. One asked, “What if the manipulation is used for what you believe does good for the person?”

Human dignity is the idea that individuals and their projects are valuable in themselves, and not merely as instruments or tools in the projects of others. Within AI Human Impact, the question dignity poses to OkCupid is: Are the romance-seekers being manipulated in experiments for their ultimate benefit because the learnings will result in a better platform and higher likelihood of romance? Or, is the manipulation about the platform’s owners and their marginally perverse curiosities?

The question’s answer is a step toward a dignity score for the company and, by extension, its parent: Match Group (Match, Tinder, Hinge …).

AI Human Impact Example:

[Redacted] & the Principle of Solidarity

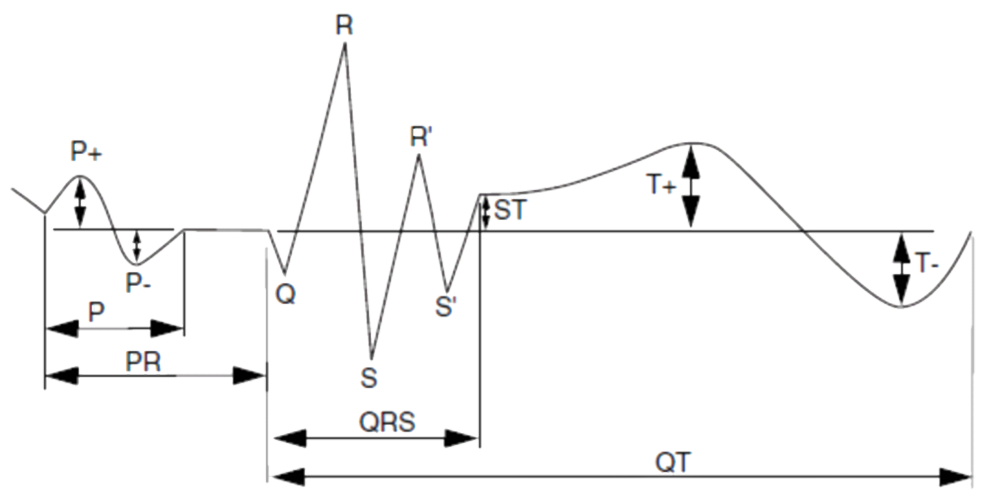

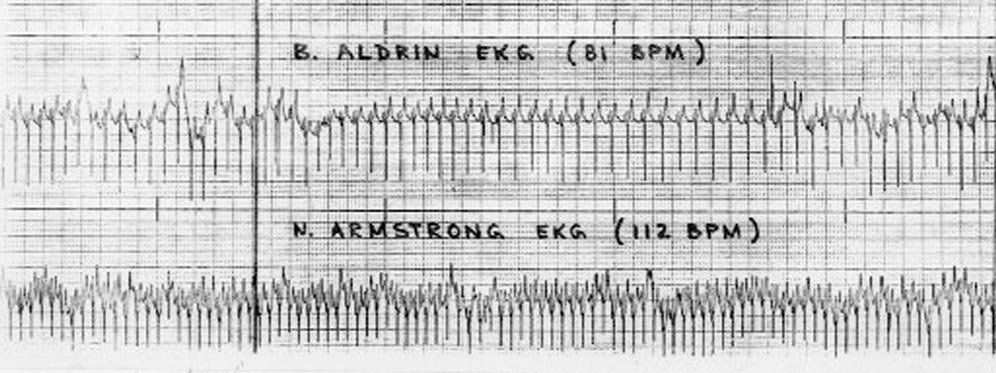

In 2020, an international team of philosophers, data scientists, and medical doctors performed a robust ethics evaluation on an existing, deployed, and functioning AI medical device. The team included James Brusseau, who directs AI Human Impact. A nondisclosure agreement with the AI medical device manufacturer will be honored here, but in broad terms the AI is a proprietary algorithm that analyzes electrocardiograms for patterns associated with impending coronary disease. The task is ideal for artificial intelligence, which is knowledge produced by pattern recognition in large datasets, like the many measurements attributable to a heartbeat, multiplied by a long string of beats. Conveniently, efficiently, and inexpensively, the machine filters for tiny deviations and so promises to improve the quality of patients’ lives by discerning the probability of impending heart disease.

In AI medicine, because biology differs across genders and races, there arises the risk that a diagnostic or treatment may function well for some groups while failing for others. As it happened, the machine at the center of our evaluation was trained on data that was limited geographically, and not always labeled in terms of gender or race. Because of the geography, it seemed possible that some races may have been over- or underrepresented in the training data.

A solidarity concern arises here. In ethics, solidarity is the social inclusiveness imperative that no one be left behind. Applied to the AI medical device, the solidarity question is: should patients from the overrepresented race(s) wait to use the technology until other races have been verified as fully included in the training data? A strict solidarity posture could respond affirmatively, while a flexible solidarity would allow use to begin so long as data gathering for underrepresented groups was also initiated. Limited or absent solidarity would be indicated by neglect of potential users, possibly because a cost/benefit analysis returned a negative result, meaning some people get left behind because it is not worth the expense of training the machine for their narrow or outlying demographic segment.

In AI Human Impact, a positive solidarity score would be assigned to the company were it to move aggressively toward ensuring an inclusive training dataset.

AI Human Impact Example:

Lemonade & the Principle of Fairness

Daniel Schreiber, the co-founder and CEO of the New York-based insurance startup Lemonade, shares concerns that the increased use of machine-learning algorithms, if mishandled, could lead to “digital redlining,” as some consumer and privacy rights advocates fear.

To ensure that an AI-led underwriting process is fair, Schreiber promotes the use of a "uniform loss ratio." If a company is engaging in fair underwriting practices, its loss ratio, or the amount it pays out in claims divided by the amount it collects in premiums, should be constant across race, gender, sexual orientation, religion and ethnicity.
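The uniform loss ratio test described above can be sketched in a few lines. This is only an illustration under stated assumptions: the group labels, the policy figures, the function names, and the 0.05 tolerance are all hypothetical, and nothing here represents Lemonade's actual data or method.

```python
from collections import defaultdict


def loss_ratios_by_group(policies):
    """Return claims paid divided by premiums collected, per group.

    Assumes every group has collected a positive amount of premiums.
    """
    claims = defaultdict(float)
    premiums = defaultdict(float)
    for policy in policies:
        claims[policy["group"]] += policy["claims_paid"]
        premiums[policy["group"]] += policy["premium"]
    return {group: claims[group] / premiums[group] for group in premiums}


def is_uniform(ratios, tolerance=0.05):
    """True when the highest and lowest group ratios differ by at most
    `tolerance` (a hypothetical threshold chosen for illustration)."""
    values = list(ratios.values())
    return max(values) - min(values) <= tolerance


# Hypothetical portfolio: two demographic groups, two policies each.
policies = [
    {"group": "A", "premium": 1000.0, "claims_paid": 600.0},
    {"group": "A", "premium": 1200.0, "claims_paid": 720.0},
    {"group": "B", "premium": 800.0, "claims_paid": 640.0},
    {"group": "B", "premium": 900.0, "claims_paid": 720.0},
]

ratios = loss_ratios_by_group(policies)
print(ratios)
print("uniform:", is_uniform(ratios))
```

In this made-up portfolio, group A pays out 0.6 in claims per unit of premium and group B pays out 0.8, so the check fails: group A is being charged more, relative to its claims, than group B.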

What counts as fairness? According to the original Aristotelian dictate, it is treating equals equally, and unequals unequally.

The rule can be applied to individuals, and to groups. For individuals, fairness means clients who present unequal risk should receive unequal treatment: whether the product is car, home, or health insurance, the less likely a customer is to make a claim, the lower the premium that ought to be charged.

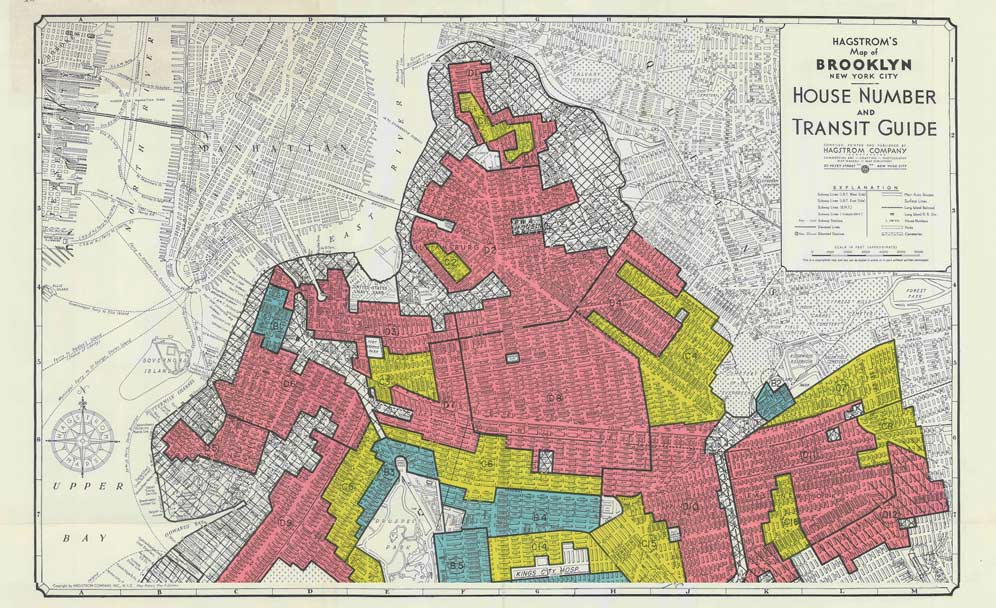

In Brooklyn, New York, individual fairness starts with statistical reality: a condominium owner in a doorman building in the ritzy and well-policed Brooklyn Heights neighborhood (home to Matt Damon, Daniel Craig, Rachel Weisz…) is less likely to be robbed than the tenant of a ground-floor apartment in the gritty Bedford-Stuyvesant neighborhood. That risk differential justifies charging the Brooklyn Heights owner less to insure more valuable possessions than the workingman out in Bed-Stuy.

What the startup insurance company Lemonade noticed – and they are not the only ones – is that premium disparities in Brooklyn neighborhoods tended to correspond with racial differences. The company doesn’t disclose its data, but the implication from the article published in Fortune is that being fair to individuals leads to apparent unfairness when the customers are grouped by race. Blacks who largely populate Bed-Stuy can reasonably argue that they’re getting ripped off since they pay relatively more than Brooklyn Heights whites for coverage.

Of course, insurance disparities measured across groups are common. Younger people pay more than older ones, and men more than women for the same auto insurance. What is changing, however, is that the hyper-personalization of AI analysis, combined with big data filtering that surfaces patterns in the treatment of racial, gender, and other identity groups, is multiplying and widening the gaps between individual and group equality. More and more, being fair to each individual is creating visible inequalities on the group level.

What Lemonade proposed to confront this problem is an AI-enabled shift from individual to group fairness in terms of loss ratios (the claims paid by the insurer divided by premiums received). Instead of applying the same ratio to all clients, it would be calibrated for equality across races, genders, and similarly protected demographics. The loss ratio used to calculate premiums for all whites would be the same one applied to other races. Instead of individuals, now it is identity groups that will be charged higher or lower premiums depending on whether they are more or less likely to make claims. (Note: Individuals may still be priced individually, but under the constraint that their loss ratio averaged with all others in their demographic equals that of other demographics.)

This is good for Lemonade because it allows them to maintain that they treat racial and gender groups equally since their quoted insurance prices all derive from a single loss ratio applied equally to the groups. It is also good for whites who live in Bed-Stuy because they now get incorporated into the rates set for Matt Damon and the rest inhabiting well-protected and well-policed neighborhoods. Of course, where there are winners, there are also losers.

More broadly, here is an incomplete set of fairness applications:

- Rates can be set individually to correspond with the risk that a claim will be made: every individual is priced by the same loss ratio, and so pays an individualized insurance premium, with a lower rate reflecting lower risk.

- Rates can be set for groups to correspond with the total claims predicted to be made by their collected members: demographic segments are priced by the same loss ratio. But the different degrees of risk corresponding with the diverse groups result in the payment of diverse premiums for the same coverage. This occurs today in car insurance, where young males pay higher premiums than young females because they have more accidents. The fairness claim is that even though the two groups are being treated differently in terms of premiums, they are treated the same in terms of the profit they generate for the insurer.

- Going to the other extreme, rates can be set at a single level across the entire population: everyone pays the same price for the same insurance regardless of their circumstances and behaviors, which means that every individual incarnates a personalized loss ratio.
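The three applications listed above can be compared with a small worked sketch. Everything in it is an assumption made for illustration: the four customers, their expected claims, the group labels, the 0.75 target loss ratio, and the function names are hypothetical, and the pricing rule (premium equals expected claims divided by the target ratio) is a simplification rather than any insurer's actual formula.

```python
from statistics import mean

TARGET_LOSS_RATIO = 0.75  # hypothetical target: claims / premiums

# Hypothetical customers with the claims each is expected to make.
customers = [
    {"name": "c1", "group": "G1", "expected_claims": 300.0},
    {"name": "c2", "group": "G1", "expected_claims": 600.0},
    {"name": "c3", "group": "G2", "expected_claims": 900.0},
    {"name": "c4", "group": "G2", "expected_claims": 1200.0},
]


def individual_premiums(customers, ratio):
    """Same loss ratio for every individual: premium tracks personal risk."""
    return {c["name"]: c["expected_claims"] / ratio for c in customers}


def group_premiums(customers, ratio):
    """Same loss ratio for every group: members pay their group's average."""
    claims_by_group = {}
    for c in customers:
        claims_by_group.setdefault(c["group"], []).append(c["expected_claims"])
    price = {g: mean(v) / ratio for g, v in claims_by_group.items()}
    return {c["name"]: price[c["group"]] for c in customers}


def flat_premiums(customers, ratio):
    """One price for the whole population, whatever the individual risk."""
    price = mean([c["expected_claims"] for c in customers]) / ratio
    return {c["name"]: price for c in customers}


def implied_loss_ratios(customers, premiums):
    """Loss ratio each individual ends up with under a given price list."""
    return {
        c["name"]: c["expected_claims"] / premiums[c["name"]]
        for c in customers
    }


for label, scheme in [
    ("individual", individual_premiums),
    ("group", group_premiums),
    ("flat", flat_premiums),
]:
    prices = scheme(customers, TARGET_LOSS_RATIO)
    print(label, prices, implied_loss_ratios(customers, prices))
```

With these numbers, all three schemes collect the same total premium, so the insurer's overall loss ratio is unchanged. What differs is who carries the cost: under the flat price, the lowest-risk customer's personal loss ratio falls to 0.3 while the highest-risk customer's rises to 1.2, which is the sense in which each individual ends up with a personalized loss ratio.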

It is difficult to prove one fairness application preferable to another, but there is a difference between better and worse understandings of the fairness dilemma. AI Human Impact scores fairness in terms of that understanding – in terms of how deeply a company engages with the dilemma – and not in terms of adherence to one or another definition.

AI Human Impact Example:

Tesla & the Principle of Safety

The Model S was on a divided highway with Autopilot engaged when a tractor trailer drove across the highway perpendicular to the car. Neither Autopilot nor the driver noticed the white side of the tractor trailer against a brightly lit sky, so the brake was not applied. The high ride height of the trailer combined with its positioning across the road and the extremely rare circumstances of the impact caused the Model S to pass under the trailer, with the bottom of the trailer impacting the windshield of the Model S.

This is the first known fatality in just over 130 million miles where Autopilot was activated. Among all vehicles in the US, there is a fatality every 94 million miles. Worldwide, there is a fatality approximately every 60 million miles.

The customer who died in this crash had a loving family and we are beyond saddened by their loss. He was a friend to Tesla and the broader EV community, a person who spent his life focused on innovation and the promise of technology and who believed strongly in Tesla’s mission. We would like to extend our deepest sympathies to his family and friends.

- Tesla Team, A Tragic Loss, Tesla Blog

This horror-movie accident represents a particular AI fear: a machine capable of calculating pi to 31 trillion digits cannot figure out that it should stop when a truck crosses in front. The power juxtaposed with the debility seems ludicrous, as though the machine completely lacks common sense which, in fact, is precisely what it does lack.

For human users, one vertiginous effect of the debility is that there is no safe moment. As with any mechanism, AIs come with knowable risks and dangers that can be weighed, compared, accepted or rejected, but it is beyond that, in the region of unknowable risks – especially those that strike as suddenly as they are unexpected – that trust in AI destabilizes. So, any car accident is worrisome, but this is terrifying: a report from the European Parliament found that the Tesla AI mistook the white tractor trailer crossing in front for the sky, and did not even slow down.

So, how is safety calculated in human terms? As miles driven without accidents? That’s the measure Tesla proposed, but it doesn’t account for the unorthodox perils of AI. Accounting for them is the task of an AI ethics evaluation.